- Introduction: The History of Artificial Intelligence

- The Seeds: Thinking About Thinking

- The Birth of a Field: Dartmouth, 1956

- Symbolic AI and the First AI Winter

- Neural Networks, Probabilistic Methods, and Revival

- Deep Blue and a Return to the Spotlight

- The Deep Learning Revolution

- Reinforcement Learning and AlphaGo

- The Era of Scale and Generative AI

- AI’s Applications and Social Impact

- Research Frontiers: Safety and Alignment

- Conclusion: The History of Artificial Intelligence

Introduction: The History of Artificial Intelligence

Artificial intelligence (AI) has grown from a philosophical idea into one of the most transformative technologies of the modern era. Once the subject of speculation in science fiction novels and academic debates, AI is now a central force driving economic, cultural, and technological change. It underpins everyday applications such as search engines, recommendation systems, fraud detection, and language translation. The history of AI is not linear; it features cycles of optimism, periods of disillusionment, and bursts of revolutionary progress. Understanding this history helps us see how AI evolved, why it matters today, and where it might take us tomorrow. Now, let’s look at that history more closely.

The Seeds: Thinking About Thinking

Long before computers, philosophers and mathematicians asked whether human thought could be formalized into logic and rules. Thinkers such as Aristotle and Descartes pondered the nature of intelligence and reasoning. In the 19th century, George Boole and, later, Gottlob Frege developed symbolic logic, laying the groundwork for representing reasoning mathematically. In the 20th century, advances in mathematical logic, such as Kurt Gödel’s incompleteness theorems (1931), highlighted both the possibilities and the limitations of formal systems. These ideas suggested that reasoning could, at least in part, be reduced to structured processes. They inspired early computer scientists to ask whether machines could replicate mental processes.

Alan Turing’s 1950 paper, “Computing Machinery and Intelligence”[1], moved the conversation from theory toward practice. Rather than debating whether machines could think, he asked how one might test for it. His proposed “imitation game,” later called the Turing Test, became an iconic way of evaluating machine intelligence: could a machine convincingly mimic human responses in conversation? The test shifted the focus from abstract speculation to empirical evaluation. It guided much of early AI research and remains influential today.

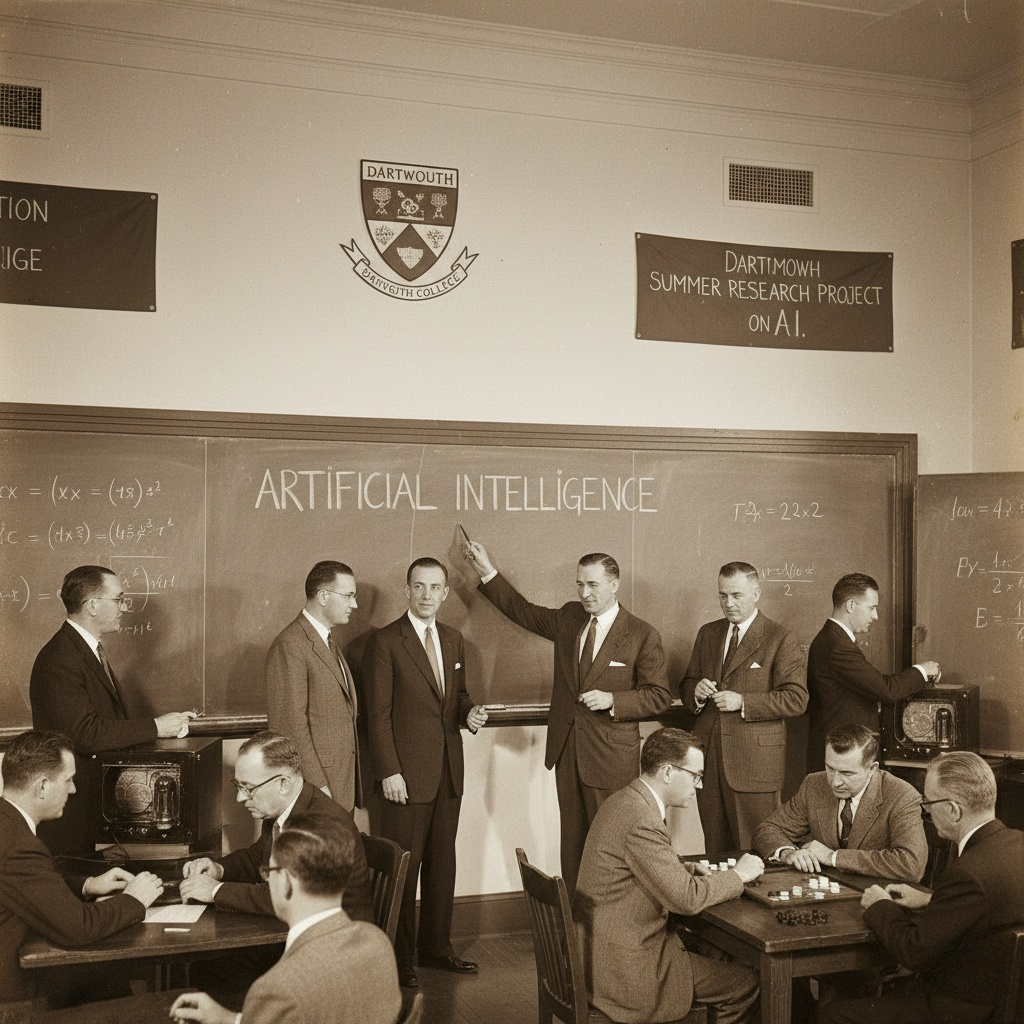

The Birth of a Field: Dartmouth, 1956

A summer workshop at Dartmouth College in 1956[2] is widely regarded as the birthplace of AI as an academic discipline. Organized by John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester, the workshop brought together leading thinkers to define the scope of the new field. Their 1955 proposal for the workshop introduced the term “artificial intelligence” and set out an ambitious conjecture: that every aspect of learning or any other feature of intelligence could in principle be described so precisely that a machine could be made to simulate it[3]. This bold declaration shaped decades of research agendas.

The optimism of Dartmouth fueled a wave of early projects. Researchers built programs that could solve algebra word problems, prove mathematical theorems, and play games such as checkers. These early successes fueled predictions that human-level AI might soon be achieved. Governments and institutions invested heavily in research. Although these early systems look limited by today’s standards, they gave credibility to the idea that intelligence could be engineered.

Symbolic AI and the First AI Winter

Symbolic AI, or “good old-fashioned AI” (GOFAI), dominated the 1960s and 1970s. Researchers hand-coded logic and rules to represent knowledge, building systems that could reason about narrow, well-defined domains. One prominent branch was expert systems, which attempted to capture the knowledge of human specialists in fields such as medicine or geology. These systems proved valuable for structured decision-making tasks. They were developed through the 1970s and widely adopted by industry in the 1980s.

However, symbolic AI struggled with real-world complexity. Encoding knowledge by hand was time-consuming, and the systems could not handle uncertainty, ambiguity, or perception effectively. Language understanding and common-sense reasoning proved especially difficult. As results fell short of expectations, funding declined in the mid-1970s, and AI entered its first “winter.” Research slowed, projects were canceled, and many scientists shifted their focus to other fields. The cycle of hype and disappointment became a defining feature of AI’s history.

Neural Networks, Probabilistic Methods, and Revival

While symbolic approaches dominated, alternative methods were quietly advancing. Neural networks, loosely inspired by the brain, were first proposed in the 1940s but gained wide traction only in the 1980s. The popularization of backpropagation in the mid-1980s gave researchers a practical way to train multilayer networks from data, reigniting interest in connectionism. These models could detect patterns in images, speech, and text, though their scale was still small.
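Backpropagation is the chain rule applied layer by layer. The network’s output error is pushed backward to find how much each weight contributed to it, and each weight is then nudged in the direction that reduces the error. The code below is a minimal sketch of that idea. It trains a tiny network with one hidden layer of sigmoid units on XOR, a classic task that a single-layer perceptron cannot solve. The function name `train_xor` and all hyperparameters are illustrative choices, not taken from any historical system.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train a 2-input, `hidden`-unit, 1-output sigmoid network on XOR with backpropagation.

    Returns (initial_loss, final_loss, predict), where predict maps (x1, x2) to an output in (0, 1).
    """
    rng = random.Random(seed)
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    # w1[j] holds the two input weights of hidden unit j; b1[j] is its bias.
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
        return h, y

    def loss():
        # Mean squared error over the four XOR cases.
        return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    initial = loss()
    for _ in range(epochs):
        for x, t in data:
            h, y = forward(x)
            # Backward pass: error signal at the output (the factor 2 from the squared
            # error is absorbed into the learning rate), then at each hidden unit.
            d_out = (y - t) * y * (1 - y)
            d_hid = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            # Gradient-descent step on every weight and bias.
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                w1[j][0] -= lr * d_hid[j] * x[0]
                w1[j][1] -= lr * d_hid[j] * x[1]
                b1[j] -= lr * d_hid[j]
            b2 -= lr * d_out
    return initial, loss(), lambda x: forward(x)[1]
```

Calling `train_xor()` and comparing the returned initial and final losses shows the error shrinking as backpropagation adjusts the weights. The network is deliberately tiny; the networks of the 1980s were larger but followed the same principle.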

At the same time, probabilistic models emerged as powerful tools for handling uncertainty. Bayesian networks, Hidden Markov Models, and other statistical methods enabled AI to work with incomplete and noisy data. They proved particularly successful in speech recognition, natural language processing, and robotics. These methods broadened AI’s reach and laid the foundation for the data-driven approaches that dominate today. This era showed that learning from data, rather than encoding rules by hand, could solve practical problems.
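A Hidden Markov Model treats observed data, such as acoustic features in speech recognition, as emitted by hidden states that change over time. The sketch below uses the forward algorithm to compute how likely an observation sequence is under such a model. It sums over all hidden state paths without listing them one by one. The two-state “weather and umbrella” model and its probabilities are made up purely for illustration.

```python
def forward_likelihood(obs, states, start_p, trans_p, emit_p):
    """Return P(obs) under an HMM using the forward algorithm.

    alpha[s] holds P(o_1..o_t, state_t = s). Summing out the previous state at each
    step keeps the cost linear in the sequence length instead of exponential.
    """
    alpha = {s: start_p[s] * emit_p[s][obs[0]] for s in states}
    for o in obs[1:]:
        alpha = {
            s: sum(alpha[prev] * trans_p[prev][s] for prev in states) * emit_p[s][o]
            for s in states
        }
    return sum(alpha.values())


# Hypothetical model: hidden weather, observed umbrella use.
STATES = ("rainy", "sunny")
START = {"rainy": 0.5, "sunny": 0.5}
TRANS = {
    "rainy": {"rainy": 0.7, "sunny": 0.3},
    "sunny": {"rainy": 0.3, "sunny": 0.7},
}
EMIT = {
    "rainy": {"umbrella": 0.9, "none": 0.1},
    "sunny": {"umbrella": 0.2, "none": 0.8},
}
```

For example, `forward_likelihood(["umbrella", "umbrella", "none"], STATES, START, TRANS, EMIT)` gives the probability of seeing that pattern over three days. The model never observes the weather directly; it infers it from noisy evidence.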

Deep Blue and a Return to the Spotlight

In 1997, IBM’s Deep Blue defeated world chess champion Garry Kasparov in a six-game match. The result marked a turning point in public perception of AI: chess, long considered a benchmark for intelligence, had been mastered by a machine. Deep Blue combined massive brute-force search, run on specialized hardware, with extensive hand-tuned knowledge of chess strategy, showcasing the power of computation and domain expertise.
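The core of game-tree search like Deep Blue’s is minimax with alpha-beta pruning. One player maximizes a position score and the other minimizes it. Any branch that provably cannot change the final choice is skipped. Deep Blue’s real search ran on custom chess hardware and had many refinements. The sketch below shows only the basic technique on a toy tree whose leaf scores are made up and stand in for a chess evaluation function.

```python
import math


def alphabeta(node, maximizing=True, alpha=-math.inf, beta=math.inf):
    """Minimax value of a game tree, using alpha-beta pruning.

    A node is either a number (a leaf's evaluation score) or a list of child nodes.
    Levels alternate between the maximizing and minimizing player.
    """
    if not isinstance(node, list):
        return node  # leaf: static evaluation score
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizer above will never allow this line, so prune
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizer above already has a better option, so prune
    return value


print(alphabeta([[3, 5], [6, 9], [1, 2], [0, -1]]))  # → 6
```

The search returns 6 without ever evaluating the leaves 2 and -1. Savings of this kind are what let a real chess engine search much deeper in the same time.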

Though chess is a closed system with clear rules, the event was symbolic. It demonstrated that machines could outperform humans in complex intellectual games. For many, it validated AI’s potential after years of skepticism. Still, researchers recognized the limitations: winning at chess did not mean mastering everyday reasoning or creativity. The victory highlighted both the promise and boundaries of AI at the time.

The Deep Learning Revolution

The early 2010s brought a revolution in AI through deep learning. In 2012, AlexNet[4], a convolutional neural network, won the ImageNet image-recognition competition by a wide margin. Its top-5 error rate was about 15%, compared with roughly 26% for the runner-up. This breakthrough was fueled by massive labeled datasets, faster graphics processing units (GPUs), and improved training techniques. It showed that deep neural networks could outperform traditional approaches by automatically learning complex features from raw data.

Following this success, deep learning spread rapidly across fields. Speech recognition improved dramatically, powering voice assistants like Siri and Google Assistant. Natural language processing advanced, enabling translation systems and chatbots. Computer vision applications, from medical imaging to autonomous vehicles, flourished. Deep learning became the backbone of modern AI, shifting the field away from hand-crafted features toward data-driven representation learning.

Reinforcement Learning and AlphaGo

Reinforcement learning (RL), which trains agents through rewards and penalties, combined with deep learning to produce striking achievements. In 2016, DeepMind’s AlphaGo defeated Lee Sedol, one of the world’s strongest Go players, four games to one[5]. Go had long been considered beyond the reach of machines because of its enormous number of possible positions and the intuitive judgment it demands. AlphaGo used deep neural networks to select promising moves and evaluate board positions. It combined these networks with Monte Carlo tree search, blending learning and planning in new ways.
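Reinforcement learning’s core loop is to act, observe a reward, and update estimates of how good each action is. Tabular Q-learning, a classic RL algorithm, illustrates that loop. It is not AlphaGo’s method, which paired deep networks with tree search. The code below is a minimal sketch on a hypothetical five-state corridor where the only reward comes from reaching the right-hand end. The function name and all parameter values are illustrative assumptions.

```python
import random


def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    The agent starts in state 0. Action 0 moves left (staying put at the wall) and action 1
    moves right. Entering the last state gives reward +1 and ends the episode; all other
    steps give 0. Returns the Q-table as a list of [Q(s, left), Q(s, right)].
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy choice: usually exploit current estimates, sometimes explore.
            # Ties (e.g. before anything is learned) are broken at random.
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            # Q-learning update: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)),
            # with no future value once the goal (a terminal state) is reached.
            target = r if s_next == goal else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
    return q


q = q_learning_corridor()
policy = ["right" if right > left else "left" for left, right in q[:-1]]
print(policy)
```

Nothing tells the agent that “right” is correct; it discovers this from delayed rewards alone. Systems like AlphaGo replace the lookup table with deep neural networks so the same idea can scale to enormous state spaces.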

The victory was historic. It demonstrated that machines could achieve superhuman performance in a domain thought to require intuition, strategy, and creativity. A later version, AlphaGo Zero, learned entirely through self-play, without human game data, and still surpassed its predecessors. These breakthroughs inspired new research into reinforcement learning, control systems, and decision-making tasks. However, they also raised questions about generalization: systems trained for specific games did not easily transfer their skills to broader tasks.

The Era of Scale and Generative AI

A defining trend of recent years is the scaling of models. Researchers found that increasing model size, dataset size, and training compute improved performance in a smooth, predictable way, a pattern described by empirical “scaling laws.” This insight led to the rise of large language models (LLMs) such as OpenAI’s GPT series. These models can generate coherent text, translate languages, and answer questions with surprising fluency.

Generative AI expanded beyond text to images, audio, and video. Models like DALL·E and Stable Diffusion can create photorealistic images from text prompts, while others generate music or code. Some researchers have reported emergent capabilities, skills that appear only once models pass a certain size, although how abrupt these jumps really are remains debated. Yet these systems also exposed challenges: bias, misinformation, hallucination, and ethical concerns about authorship. Generative AI represents both a powerful creative tool and a disruptive force in many industries.

AI’s Applications and Social Impact

AI’s applications now span nearly every sector. In healthcare, AI assists radiologists in detecting tumors and predicting patient outcomes. In finance, it powers fraud detection and algorithmic trading. Retailers use recommendation systems to personalize shopping experiences, while logistics companies optimize supply chains with predictive analytics. Transportation benefits from driver-assistance systems and autonomous vehicle research. Education platforms employ AI to tailor learning to students’ needs.

The impact is profound but uneven. Some workers benefit from new tools, while others face displacement due to automation. AI raises concerns about privacy, surveillance, and the spread of disinformation. Ethical dilemmas arise in areas such as predictive policing and military applications. As AI grows more capable, balancing innovation with accountability becomes critical. Governments, businesses, and civil society must navigate how to use AI responsibly.

Research Frontiers: Safety and Alignment

Modern AI research increasingly emphasizes safety, transparency, and alignment with human values. Interpretability research seeks to make complex models understandable so their decisions can be trusted. Safety work explores how to prevent unintended consequences, such as biased predictions or unsafe behavior in autonomous systems. Alignment studies aim to ensure AI systems pursue goals consistent with human ethics and societal well-being.

Beyond technical solutions, governance and regulation are gaining importance. International organizations and governments are drafting and adopting frameworks, such as the European Union’s AI Act, to manage AI’s risks while promoting innovation. Industry leaders collaborate on ethical guidelines and best practices. These efforts recognize that AI is not only a technical challenge but also a societal one. How AI is deployed will shape economies, politics, and daily life for decades to come.

Conclusion: The History of Artificial Intelligence

The future of AI is uncertain yet full of potential. Some expect steady, incremental progress, while others predict transformative breakthroughs that could rival the Industrial Revolution in impact. Emerging research into artificial general intelligence (AGI), neuromorphic computing, and hybrid systems that combine symbolic and statistical methods could open new directions. Questions of control, accountability, and equitable access will remain central.

One thing seems certain: AI will continue to reshape how we work, learn, and interact. It will likely become more integrated into healthcare, climate science, and creative industries. The challenge lies in steering this technology toward outcomes that benefit humanity broadly, rather than concentrating power or exacerbating inequality. Thoughtful governance, collaboration, and ethical foresight will be essential in guiding AI’s next chapters.